006 📧 - Big AI News in Generative Art from Ludwig Maximilian University of Munich

Hi Everyone!

In today's short issue I want to briefly discuss a massive new development in the generative art field.

These past few months have been pretty intense, with the release of DALL-E 2 from OpenAI and Imagen from Google. Both models are awesome, but they are only usable through some sort of API, or not at all.

For people not familiar with these models, check the past issues of the newsletter! In a nutshell, given a text prompt, these models can generate an image matching the general feeling of that prompt.

Yesterday, the group behind another impressive model called Latent Diffusion Model released the full source code along with the trained weights of their model!

This is massive for two main reasons:

- The model is much more compact than the other ones, meaning it can be run with fewer computing resources.

- It is now available to literally anyone.

This has serious implications: anyone can now generate digital assets using this type of model.

The results can range from interesting to potentially dangerous for society.

Anyone can download the weights and use a model trained on a large portion of the internet to generate, with very little effort, digital assets of things that don't exist.

> #stablediffusion text-to-image checkpoints are now available for research purposes upon request at https://t.co/7SFUVKoUdl
>
> Working on a more permissive release & inpainting checkpoints.
>
> Soon™ coming to @runwayml for text-to-video-editing pic.twitter.com/7XVKydxTeD
>
> Patrick Esser (@pess_r), August 11, 2022

Apart from the obvious devaluation of digital art as a whole, the potential to create "fake real images" just skyrocketed.

Figuring out if something on the internet is real or not will now become much more complicated if anyone with basic prompt-engineering knowledge can do something like the above.

What I think will happen next is that we will start seeing more of these digitally manipulated images and that there will be more talk about the ethics of these models released publicly.

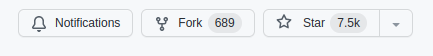

However, Pandora's box is already open. 7.5K people have already starred the repository and more than 600 have forked it.

If you look at the LICENSE of the model, you can see that the potential negative impact of this technology is already in the back of the authors' minds:

Use Restrictions

You agree not to use the Model or Derivatives of the Model:

- In any way that violates any applicable national, federal, state, local or international law or regulation;

- For the purpose of exploiting, harming or attempting to exploit or harm minors in any way;

- To generate or disseminate verifiably false information and/or content with the purpose of harming others;

- To generate or disseminate personal identifiable information that can be used to harm an individual;

- To defame, disparage or otherwise harass others;

- For fully automated decision making that adversely impacts an individual’s legal rights or otherwise creates or modifies a binding, enforceable obligation;

- For any use intended to or which has the effect of discriminating against or harming individuals or groups based on online or offline social behavior or known or predicted personal or personality characteristics;

- To exploit any of the vulnerabilities of a specific group of persons based on their age, social, physical or mental characteristics, in order to materially distort the behavior of a person pertaining to that group in a manner that causes or is likely to cause that person or another person physical or psychological harm;

- For any use intended to or which has the effect of discriminating against individuals or groups based on legally protected characteristics or categories;

- To provide medical advice and medical results interpretation;

- To generate or disseminate information for the purpose to be used for administration of justice, law enforcement, immigration or asylum processes, such as predicting an individual will commit fraud/crime commitment (e.g. by text profiling, drawing causal relationships between assertions made in documents, indiscriminate and arbitrarily-targeted use).

I have never seen a copyleft-style LICENSE that leans so heavily on the legal side of how the technology may be used.

Not much more to add here, other than that the rest of 2022 will most likely be pretty wild on that front!